Shaymaa E. Sorour

Dept. of Educational Technology

Faculty of Specific Education Kafrelsheikh University

Egypt

shaymaasorour@gmail.com

Hanan E. Abdelkader

Dept. of Computer Teacher Preparation

Faculty of Specific Education

Mansoura University

Egypt

h_elrefaey@yahoo.com

Abstract—This research focuses on understanding student performance by providing automatic feedback after students write freestyle comments in each lesson. Written comments express students’ learning activities, tendencies, attitudes, and situations related to the lesson. Random Forest (RF) and Support Vector Machine (SVM) models were applied to predict students’ performance, and a Majority Vote (MV) method was applied to the predicted results across consecutive lessons during the semester. The proposed system tracks students’ learning activities and attitudes in different courses and provides valuable feedback to improve educational performance.

Keywords—Automatic feedback, Comment data, SVM, RF, MV

I. INTRODUCTION

Improving students’ performance, determining their actual progress, and enhancing their learning process can be extremely valuable in the educational environment. To achieve a high level of student performance, we have to find ways to measure students’ current progress and predict the results of the learning process at the earliest stages, since it is vital to control and handle difficulties from the beginning [1,2]. Academic analytics deserves proper attention because it helps educational institutions enhance student accomplishment [3]. Predicting students’ performance has become possible because of the massive amount of data generated by educational processes [4]. Student performance is the most critical goal for higher educational institutions, and the quality of a university can be measured by its students’ academic achievement, which is considered the essential criterion. Previous literature reports that many studies define student performance in terms of the learning gains measured through assessment [3]. However, many studies measure students’ success at graduation, evaluating their performance using final grades. Final grades combine the course structure, reports, activities, and final exam marks. Such evaluation helps maintain the effectiveness of students’ learning processes. Also, by analyzing students’ performance, strategic programs can be designed effectively over the period of study [5].

Evaluating students is considered the most effective method at educational institutions, and it precedes teacher evaluation. Two types of questions can be presented to students: fixed-ended questions and open-ended questions [6]. Fixed-ended questions do not allow respondents to provide unique or unanticipated responses; students must choose from specified options. Open-ended questions allow students to give any answer they want without forcing them to select from concrete options, so they give freedom of response and are not bound to standard answer categories. Students’ answers to such questions, known as “freestyle comments”, can take many forms and provide valuable information [7]. Students’ comments reflect the complexity of their learning strategies and their reactions to the teaching environment; they provide additional information about essential educational issues and suggest useful ways to develop them [8-10]. Analyzing students’ comments can provide useful insights into teaching aspects and courses that benefit students [11,12].

Currently, many techniques have been proposed for extracting useful information from student comments. Text mining (TM) is one of the most common methods for analyzing comments and has been widely applied in educational environments. Text mining performs several processes: it handles unstructured input, extracts meaningful numeric indices from the text, and thereby makes the information contained in the text accessible to data mining algorithms such as statistical and machine learning methods. Information can be extracted from the words of documents, and these words can be analyzed to determine the similarities between documents and between words [13,14].

In the educational context, feedback plays the critical role of narrowing the gap between students’ actual level and the targeted outcomes [15]. Immediate and useful feedback can help students recognize and correct mistakes, encourage them to acquire knowledge, and increase their motivation toward learning and achievement [16]. Automatic feedback plays an essential role in informing students of their mistakes and providing a mechanism to correct them. It helps modify the learner’s behavior by evaluating its results. Automatic feedback also plays an important role in self-learning: it supports and motivates students, increases learning efficiency, and improves learning quality and speed [17,18]. In addition, automatic feedback based on the freestyle comments that students write in the proposed system gives them opportunities to improve their performance through quick action.

In this paper, we built a system that collects comment data, provides automatic feedback to each student, and examines more applicable models for predicting student performance. Particular student features are related to the student success rate, and detecting the relationships between each student’s tasks and their actual score is one of the efficient applications of student performance analysis. Besides, automatic feedback could help enhance students’ learning process throughout the semester [19, 20]. To contribute further to understanding students individually in the class, this research analyzes student comments to grasp students’ status and attitudes, predicts their final grades, and provides automatic feedback that allows each student to follow the anticipated results in consecutive lessons.

II. RELATED WORK

In this section, studies that present automatic feedback are addressed in more detail as an essential tool for evaluating and guiding students toward further progress. We also review the importance of detecting students’ performance at an early stage and predicting it using data mining and text mining techniques. Zenobia et al. [21] aimed to help nurse educators/academics understand students’ expectations and views through the feedback students give educators about teaching performance and subject quality. Four essential themes emerged: collecting feedback at more than one time point; adjusting the questions asked to collect feedback; determining the efficiency of feedback collection methods; and planning the next step after receiving student feedback. They confirmed the importance of examining the issue from the students’ perspective, exploring the timing and channels of feedback collection, and identifying the preferred uses of student feedback. Further, [22] provided automated feedback based on text mining analysis to reduce plagiaristic behavior in learners’ online assignments. Document similarity analysis was performed twice, at the middle and at the end of the semester, in a computer science concepts course. The analyses detected a statistically significant difference, before and after feedback, in both the ratio of plagiarized posts and the ratio of students exhibiting plagiaristic behavior. In addition, because e-learning systems generate huge amounts of data that are difficult to analyze manually, computational analytical techniques are needed. [23] proposed using knowledge discovery techniques to analyze students’ historical course grade data and predict the probability of students dropping out of a course.
Its significant contribution was a tutoring action plan that learning institutions (and others) could apply to decrease the dropout rate in e-learning courses. [24] used data mining methods to study undergraduate students’ performance, since data mining helps mine educational data to improve the quality of the educational process. Two aspects of student performance were addressed: first, predicting academic achievement; second, studying typical progressions and combining them with prediction results. Groups of low- and high-achieving students were identified. The results showed that it is possible to give timely warning and support to low-achieving students, and advice and opportunities to high-performing students, by focusing on a small number of courses that indicate good or poor performance. [25] showed that feedback can be presented to learners based on comparable solutions from a set of stored examples. They presented a framework for building metrics on sequential data and using machine learning techniques to adapt those metrics. The adapted metric recovers the distinction between wrong and correct learner attempts in a simulated sports-training data set, and the underlying learner strategy classification in a real Java programming data set. [26] examined the effect of blended learning (BL) on higher education students’ academic achievement through a statistical synthesis of studies comparing student performance in BL with traditional classroom instruction. The findings show that BL is significantly associated with better learning performance than traditional classrooms, and the authors discuss the implications for improving student performance and for future research.
[10] indicated that, although extensive research has examined student ratings on closed-ended questionnaires, little research has examined students’ responses to open-ended questions. They inspected students’ written comments in 198 classes in terms of their frequency, content, orientation, and consistency with quantitative ratings on closed-ended items. The comments tended to be positive rather than negative and general rather than specific. Applications of new automated scoring techniques, such as natural language processing and machine learning, enable automated feedback on students’ short written responses, and numerous studies have confirmed the value of automated feedback in computer-mediated educational environments [27]. Studies of feedback properties often address timing; according to timing, feedback can be immediate or delayed. In the educational context, immediate feedback has commonly been more effective than delayed feedback [15,28]. Immediate feedback can identify and address mistakes while students are still forming their understanding of academic concepts [29]. The utility of immediate feedback is even greater in computer-based educational environments, where feedback can reach each student according to their performance [15,30].

Previous studies have confirmed that feedback is valuable in supporting learning, yet providing students with automatic feedback is not easy. Also, written comments that express students’ attitudes and knowledge play a vital role in improving student performance.

III. BACKGROUND

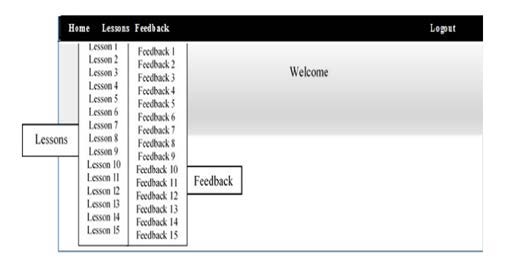

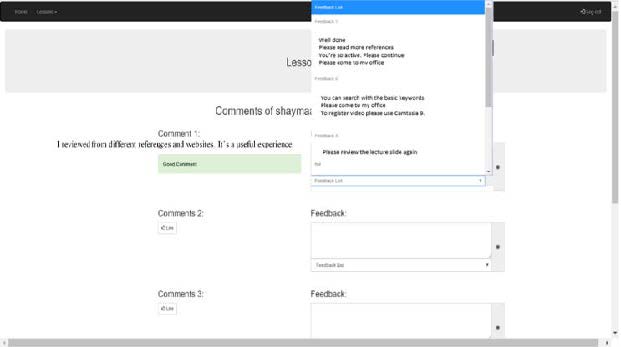

Giving feedback to students after each lesson plays a vital role in improving their performance. The proposed system collected comment data for 15 lessons automatically through an online website, as shown in “Fig. 1”. The system provided instructions to improve students’ writing skills and extracted significant assignments to supply common and comprehensive instructions in each lesson during the semester. “Fig. 1” shows the system interface after signing in: it presents 15 lessons, in which students write comments, and 15 feedback pages, which provide feedback after the comments are written.

Fig. 1. System interface that includes lessons and feedback

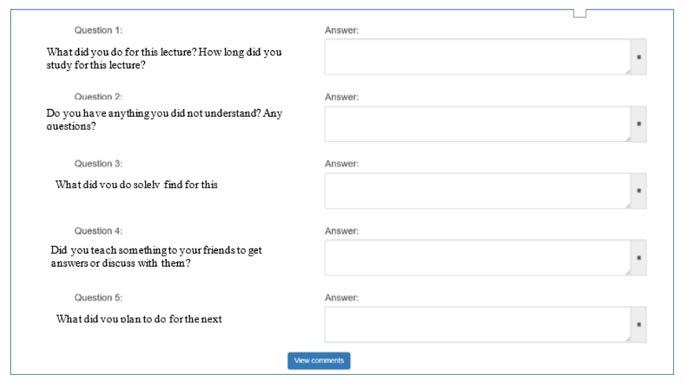

Fig. 2. Five questions and answers

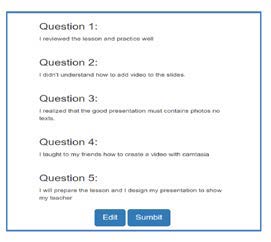

Comment data were collected from students’ answers to five questions, as shown in “Fig. 2”, through which students could express their educational situation. Written comments reflect students’ knowledge, activities, attitudes, and tendencies for each lesson. Students reflect on the lesson and identify their problems in order to improve their learning, and the teacher offers advice that helps students describe their situation adequately in their comments. Question (1), “Review the lesson”: students describe their achievements in the previous lecture. Question (2): each student writes the points they understood or found difficult in the current lecture. Question (3): students describe the information they discovered and recognized and the efforts they made for the lesson. Question (4): students describe cooperative activities with their classmates to solve problems. Question (5), “Next plan”: students outline their plan before the next lecture. Students can view and edit their comments before submitting them, as shown in “Fig. 3”.

Fig. 3. Viewing comments before submitting them

IV. SYSTEM OVERVIEW

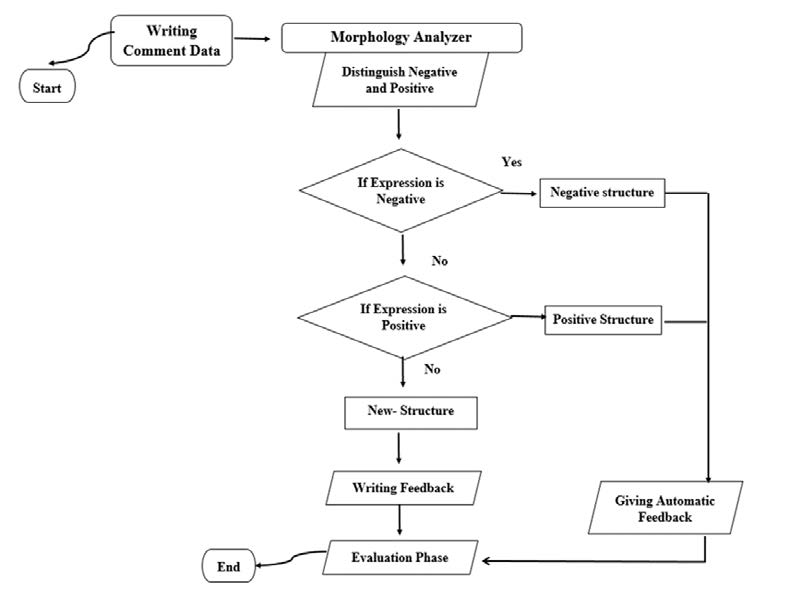

“Fig. 4” shows an overview of the proposed system, from collecting high-quality student comment data to predicting student performance and providing automatic feedback to improve students’ performance.

Fig. 4. System Flowchart

Students wrote their comments in English after each lesson, as shown in “Fig. 1”. The participants were 122 undergraduate students in the Department of Educational Technology (Computer Teacher Preparation specialization), Faculty of Specific Education, Kafrelsheikh University, Egypt. Students took the Multimedia and Applied Statistics courses, each consisting of 15 lessons. They registered in the system and signed in with their email addresses. They wrote comment data after each lesson, and the system allowed them to edit their comments before submitting them. The system sent each student an email containing their comments.

A. Students’ Levels

Students’ marks were classified into five levels, as shown in Table 1, which lists each grade, the number of students, and the corresponding mark range. The evaluation took into account the attendance rate and the average mark of each student’s exercises and reports for six assignments, which covered lessons 1 to 4, lessons 1 to 6, lessons 1 to 8, lessons 1 to 10, lessons, and lessons 1 to 15.

Table 1. Number of Students at Each Level

| GRADE | NUMBER OF STUDENTS | DEGREE (MARK RANGE) |
|---|---|---|
| A | 70 | 85-100 |
| B | 20 | 75-84 |
| C | 20 | 70-74 |
| D | 8 | 50-69 |
| E | 4 | 0-49 |

Because levels D and E contain fewer students than the other levels, which makes their grades harder to predict, the comment data for levels D and E were combined into a single level, D.
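The level assignment in Table 1, including the merge of levels D and E, can be sketched as a small lookup. The function name `grade_level` and the treatment of boundary marks (each range includes its lower bound, as in Table 1) are illustrative assumptions, not the authors’ code.

```python
# Lower bound of each level's mark range, taken from Table 1.
LEVEL_BOUNDS = [(85, "A"), (75, "B"), (70, "C"), (50, "D"), (0, "E")]


def grade_level(mark: float, merge_low_levels: bool = True) -> str:
    """Map a final mark (0-100) to a level; E is folded into D by default."""
    if not 0 <= mark <= 100:
        raise ValueError("mark must be between 0 and 100")
    # First range whose lower bound the mark reaches (bounds are in descending order).
    level = next(name for lower, name in LEVEL_BOUNDS if mark >= lower)
    if merge_low_levels and level == "E":
        return "D"  # D and E are combined because both levels have few students
    return level


print([grade_level(m) for m in (92, 80, 72, 60, 30)])
# ['A', 'B', 'C', 'D', 'D']
```

Keeping `merge_low_levels` as a flag makes it easy to compare the original five-level scheme with the merged four-level one.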

B. Students’ Groups

In this research, we classified students into two groups:

- Group 1 (comment data only): Students write their comments after each lesson

- Group 2 (giving feedback): The system provided automatic feedback to each student after the student wrote comments in each lesson from 1 to 15.

C. Morphology Analysis

The proposed system analyzed students’ comments automatically using the TF-IDF method with NLTK, extracting the parts of speech of the words (e.g., nouns, verbs, adverbs, and adjectives). TF refers to the frequency of a word or expression in a comment, and IDF refers to the number of comments in the corpus divided by the number of comments in which the word or expression appears, so that terms appearing in fewer comments receive higher weights. In addition, the system applied an Attribute Dictionary (AD), which captures positive and negative expressions automatically from students’ comments. First, the system concentrated on verbs and negative auxiliary verbs; in English, “did not” is commonly used to express negation in the past tense, and “do not” or “does not” in the present tense.

The system starts from the negative auxiliary verb and then extracts the phrase preceding the verb back to the subject. Furthermore, it extracts positive verbs and whole phrases that frequently express positive attitudes. An attribute value is assigned to the words extracted from each student’s answer to each question to build an automatic AD; the value is one of Positive (Pos.), Missing (Mis.), and Negative (Neg.). Examples of positive attitudes are “do the best”, “attractive”, “able to”, “manage to”, and “can do”. Examples of negative attitudes are “cannot do”, “confused”, “afraid”, “unable to”, and “frustrated”. High-frequency words and expressions that express students’ attitudes toward the lesson were extracted from all lessons. Then one of the three values (Pos., Mis., or Neg.) was assigned and used to predict student performance.

This research focuses on positive and negative verbs in the answers to the five questions from lesson 1 to lesson 15 to assign the attribute. Students’ comments were translated into positive or negative attributes. However, some students wrote their comments without verbs, so other extracted words were checked to allocate the correct attribute.
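The two steps above, TF-IDF weighting and Pos/Mis/Neg assignment from an Attribute Dictionary, can be sketched in plain Python. This is a simplified illustration under stated assumptions, not the system’s implementation: a regular-expression tokenizer stands in for the NLTK part-of-speech processing, the IDF takes the common logarithmic form log(N/df) rather than the plain ratio described in the text, the dictionary holds only the example phrases listed above plus a few negations, and treating an answer with no matching phrase as Missing is our assumption.

```python
import math
import re
from collections import Counter


def tokenize(text):
    # Lowercase word tokenizer; stands in for the NLTK processing used in the paper.
    return re.findall(r"[a-z']+", text.lower())


def tf_idf(comments):
    """TF = count of a term in one comment; IDF = log(N / df) over all comments."""
    docs = [Counter(tokenize(c)) for c in comments]
    df = Counter(term for doc in docs for term in doc)  # comments containing each term
    n = len(docs)
    return [{t: tf * math.log(n / df[t]) for t, tf in doc.items()} for doc in docs]


# Hypothetical mini Attribute Dictionary built from the example phrases in the text.
POSITIVE = ["do the best", "attractive", "able to", "manage to", "can do"]
NEGATIVE = ["cannot do", "confused", "afraid", "unable to", "frustrated",
            "did not", "do not", "does not", "didn't", "don't", "doesn't", "can't"]


def contains(text, phrases):
    # Whole-word matching, so "able to" does not fire inside "unable to".
    return any(re.search(r"\b" + re.escape(p) + r"\b", text) for p in phrases)


def attribute(answer):
    """Assign Neg, Pos, or Mis to one answer; negative phrases are checked first."""
    text = answer.lower()
    if contains(text, NEGATIVE):
        return "Neg"
    if contains(text, POSITIVE):
        return "Pos"
    return "Mis"
```

For example, `attribute("I was unable to run the program")` returns `"Neg"`, while `attribute("We reviewed chapter two")` returns `"Mis"`. Checking negatives first is a deliberately crude rule: an answer such as “I did not feel confused” is still labeled Neg, which is why the text notes that other extracted words must be checked.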

D. An Advisory System

To build an advisory system, automatic feedback was provided to each student from the first lesson onward according to the positive and negative expressions in their comments. If a student’s comments did not contain positive or negative expressions, the teacher could write the feedback, and the system saved all feedback for each question so that it could be reused in other lessons. “Fig. 5” shows how feedback is given to students.

Fig. 5. Adding useful comments and saving all feedback to the list

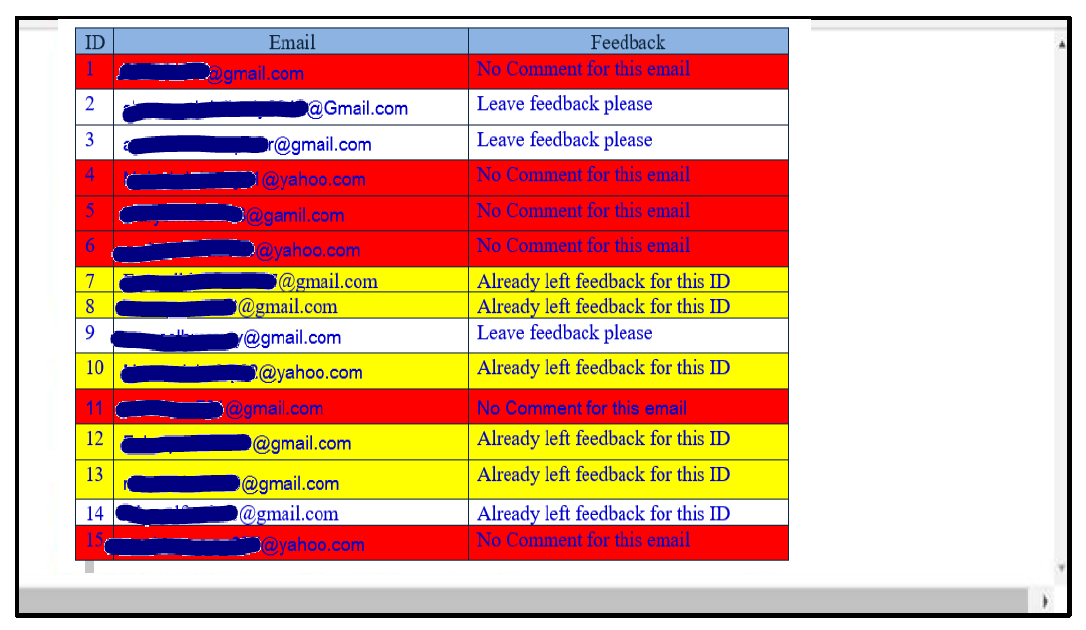

Our system distinguishes between different cases, as shown in “Fig. 6”: students who wrote their comments and received feedback are shown in yellow; students who did not write their comments are shown in red; and students who wrote their comments but received no system feedback are shown in gray, indicating that the system needs the teacher to add specific feedback.
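The color coding above amounts to a simple decision rule. A minimal sketch, in which the `Submission` record and its fields are hypothetical names introduced only for illustration:

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Submission:
    student: str
    comment: Optional[str] = None   # None or empty when no comment was written
    feedback: Optional[str] = None  # None when no feedback was produced


def status_color(sub: Submission) -> str:
    """Return the display color for one student's submission in one lesson."""
    if not sub.comment:
        return "red"  # no comment written
    # Comment written: yellow if feedback exists, gray if the teacher must add it.
    return "yellow" if sub.feedback else "gray"


def needs_teacher(subs: List[Submission]) -> List[str]:
    """Students whose comments the system could not answer automatically."""
    return [s.student for s in subs if status_color(s) == "gray"]
```

Listing the gray cases separately mirrors the workflow in the text, where those comments are the ones that require the teacher’s attention.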

To motivate students to write comment data that express their attitudes and current situation, the system can highlight a useful comment so that all students can see it and who wrote it, as shown in “Fig. 5”. The system also saves all feedback given to all students, and the teacher can select from this list when adding feedback, as shown in “Fig. 5”.

To show how previous feedback was reused, our system counted the feedback reused from previous lessons and the new feedback added in the current lesson, as shown in Fig. 7. From lesson 7 onward, the system did not need any new feedback; it relied entirely on previously saved feedback.
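The save-and-reuse behavior, together with the per-lesson counts of reused and new feedback, can be sketched as a small store. The class name, the choice to key feedback by (question, attribute), and the `teacher` callback are all hypothetical; the paper does not describe how saved feedback is indexed.

```python
from collections import defaultdict
from typing import Callable, Dict, Optional, Tuple


class FeedbackBank:
    """Hypothetical store that reuses saved feedback and counts reuse per lesson."""

    def __init__(self) -> None:
        self.saved: Dict[Tuple[int, str], str] = {}     # (question, attribute) -> text
        self.reused: Dict[int, int] = defaultdict(int)  # lesson -> reused feedback count
        self.new: Dict[int, int] = defaultdict(int)     # lesson -> new feedback count

    def feedback_for(self, lesson: int, question: int, attribute: str,
                     teacher: Optional[Callable[[int, str], str]] = None) -> Optional[str]:
        key = (question, attribute)
        if key in self.saved:                 # reuse feedback saved in an earlier lesson
            self.reused[lesson] += 1
            return self.saved[key]
        if teacher is None:                   # nothing saved and no teacher input yet
            return None
        text = teacher(question, attribute)   # teacher writes new feedback
        self.saved[key] = text                # kept for later lessons
        self.new[lesson] += 1
        return text
```

Under this keying, once every (question, attribute) pair that occurs has been answered once, later lessons only increment `reused`, which is the pattern the text reports from lesson 7 onward.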

Fig. 6. The system can distinguish between different students who write comments and have feedback

E. Prediction Models

In this study, two experimental groups were formed in each course. In the first group, students wrote freestyle comment data; in the second group, automatic feedback was also given after each lesson. SVM and RF models were applied to predict student performance, and the Majority Vote method was applied to the predictions obtained from the attribute vectors of students’ comments to improve the prediction.
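As an illustration of what an attribute vector might look like, assume each of the five answers in a lesson carries one value from {Pos, Mis, Neg}, as described in the Morphology Analysis subsection, and that the vector is a one-hot encoding of those values (15 entries per lesson). This encoding is our assumption; the paper does not specify how the vectors are built.

```python
from typing import List, Sequence

ATTRIBUTE_VALUES = ("Pos", "Mis", "Neg")
N_QUESTIONS = 5


def encode_lesson(attributes: Sequence[str]) -> List[int]:
    """One-hot encode the five per-question attributes into a 15-element vector."""
    if len(attributes) != N_QUESTIONS:
        raise ValueError(f"expected {N_QUESTIONS} attributes, got {len(attributes)}")
    vector: List[int] = []
    for attr in attributes:
        if attr not in ATTRIBUTE_VALUES:
            raise ValueError(f"unknown attribute {attr!r}")
        # Three slots per question: exactly one is set to 1.
        vector.extend(1 if attr == value else 0 for value in ATTRIBUTE_VALUES)
    return vector


print(encode_lesson(["Pos", "Mis", "Neg", "Pos", "Pos"]))
# [1, 0, 0, 0, 1, 0, 0, 0, 1, 1, 0, 0, 1, 0, 0]
```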

a. Support Vector Machine

SVM is a supervised learning model that analyzes data for classification and regression analysis [31]. Given training examples labeled as belonging to one of two categories, the SVM training algorithm builds a model that assigns new examples to one category or the other, which makes it a non-probabilistic binary linear classifier. New examples are then mapped into the same space and predicted to belong to a category. Compared with other machine learning techniques such as Artificial Neural Networks, SVM has been reported to perform better, and it has achieved strong performance in several predictive data mining applications, such as text categorization, time series prediction, and pattern recognition [32].

b. Random Forest

The RF algorithm is a reference classifier that underlies many current machine learning systems. Its success is based on creating a forest of several trees whose combined votes give accurate prediction results. Random Forest adds additional randomness to the model while growing the trees: instead of searching for the best feature among all features when splitting a node, it searches for the best feature among a random subset of features [33]. In our research, the best results for all 15 lessons were obtained using 10 trees to compose the RF.
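The SVM described above can be made concrete with a minimal sketch of a linear SVM, extended from two classes to the four levels by training one binary classifier per level (one-vs-rest). The training method here, Pegasos (stochastic sub-gradient descent on the L2-regularized hinge loss), is a swapped-in choice for illustration; the paper does not state which SVM implementation, kernel, or parameters it used, and `lam`, `epochs`, and `seed` are hypothetical settings.

```python
import random
from typing import Dict, List, Sequence


def _dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


def train_binary_svm(X, y, lam: float = 0.01, epochs: int = 200, seed: int = 0) -> List[float]:
    """Pegasos: stochastic sub-gradient descent on the L2-regularized hinge loss.

    Labels in y are +1 / -1. A constant 1.0 feature is appended so that the
    bias is learned as an ordinary (regularized) weight.
    """
    rng = random.Random(seed)
    data = [(list(x) + [1.0], t) for x, t in zip(X, y)]
    w = [0.0] * len(data[0][0])
    step = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, t in data:
            step += 1
            eta = 1.0 / (lam * step)                # decreasing step size
            violated = t * _dot(w, x) < 1           # hinge margin check with current w
            w = [(1 - eta * lam) * wi for wi in w]  # regularization shrink
            if violated:
                w = [wi + eta * t * xi for wi, xi in zip(w, x)]
    return w


def train_one_vs_rest(X, labels, **kw) -> Dict[str, List[float]]:
    """One binary SVM per level (e.g. A, B, C, D) against all other levels."""
    return {c: train_binary_svm(X, [1 if lab == c else -1 for lab in labels], **kw)
            for c in sorted(set(labels))}


def predict(models: Dict[str, List[float]], x) -> str:
    """Pick the level whose classifier gives the largest decision value."""
    xb = list(x) + [1.0]
    return max(models, key=lambda c: _dot(models[c], xb))
```

In practice a library implementation would normally be used; the sketch only shows the linear decision rule and margin-based training that the description refers to.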

V. EVALUATION METHODS

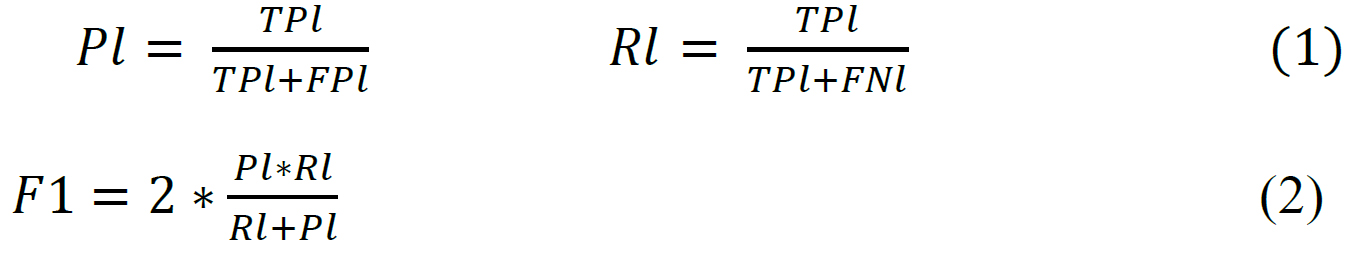

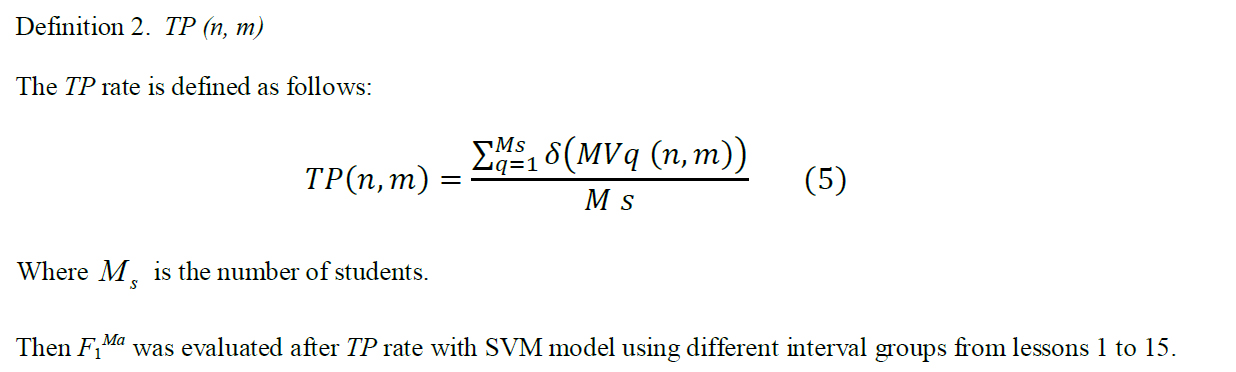

A 10-fold cross-validation was applied to predict student performance from lesson 1 to lesson 15. Let L denote the set of student levels, L = {A, B, C, D}. TP denotes true positives, TN true negatives, FP false positives, and FN false negatives. To evaluate the proposed methods, the predictions from students’ comments were measured by the F-measure (F1), the harmonic mean of Precision (Pl) and Recall (Rl) for each level l. The overall F1 result is calculated by macro-averaging (F1Ma) over the levels [34].
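The per-level measures and their macro average can be sketched as follows, using the standard definitions Pl = TPl / (TPl + FPl), Rl = TPl / (TPl + FNl), F1l = 2 Pl Rl / (Pl + Rl), and F1Ma as the unweighted mean of F1l over L. The random, non-stratified fold assignment and the convention of scoring 0 when a denominator is zero are our assumptions; the paper does not specify either.

```python
import random
from typing import Dict, List, Sequence, Tuple


def kfold_indices(n: int, k: int = 10, seed: int = 0) -> List[List[int]]:
    """Shuffle 0..n-1 and deal the indices into k folds of (almost) equal size."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def macro_f1(y_true: Sequence[str], y_pred: Sequence[str],
             levels: Sequence[str] = ("A", "B", "C", "D")) -> Tuple[float, Dict[str, float]]:
    """Return (F1Ma, per-level F1), treating each level one-vs-rest."""
    per_level: Dict[str, float] = {}
    for level in levels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == level and p == level for t, p in pairs)
        fp = sum(t != level and p == level for t, p in pairs)
        fn = sum(t == level and p != level for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        per_level[level] = 2 * precision * recall / denom if denom else 0.0
    return sum(per_level.values()) / len(levels), per_level
```

In a cross-validation loop, each fold in turn is held out, the model is trained on the remaining nine folds, and the held-out predictions are scored with `macro_f1`.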

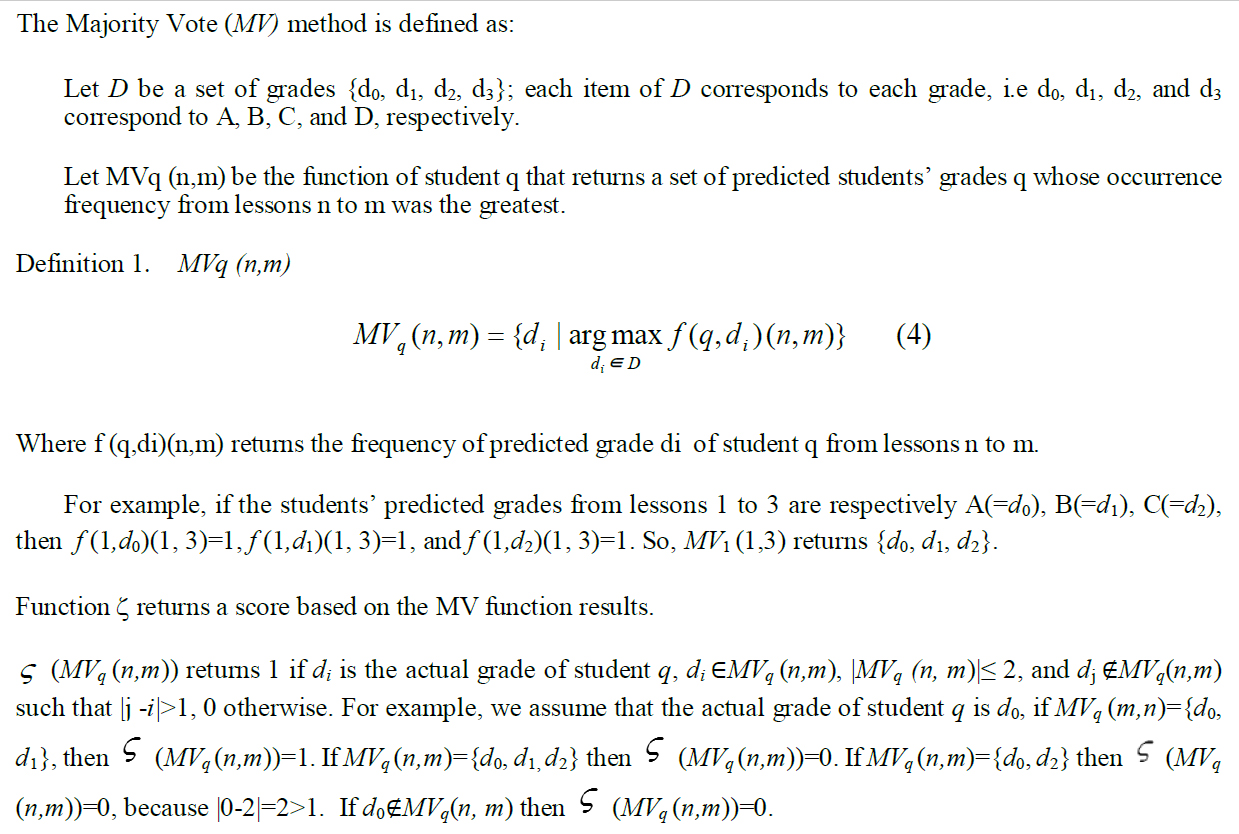

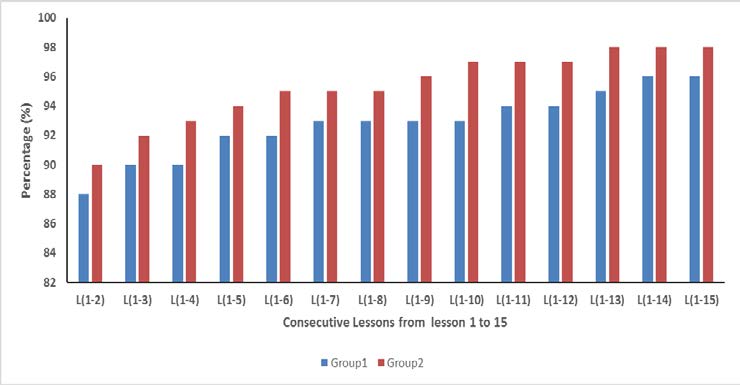

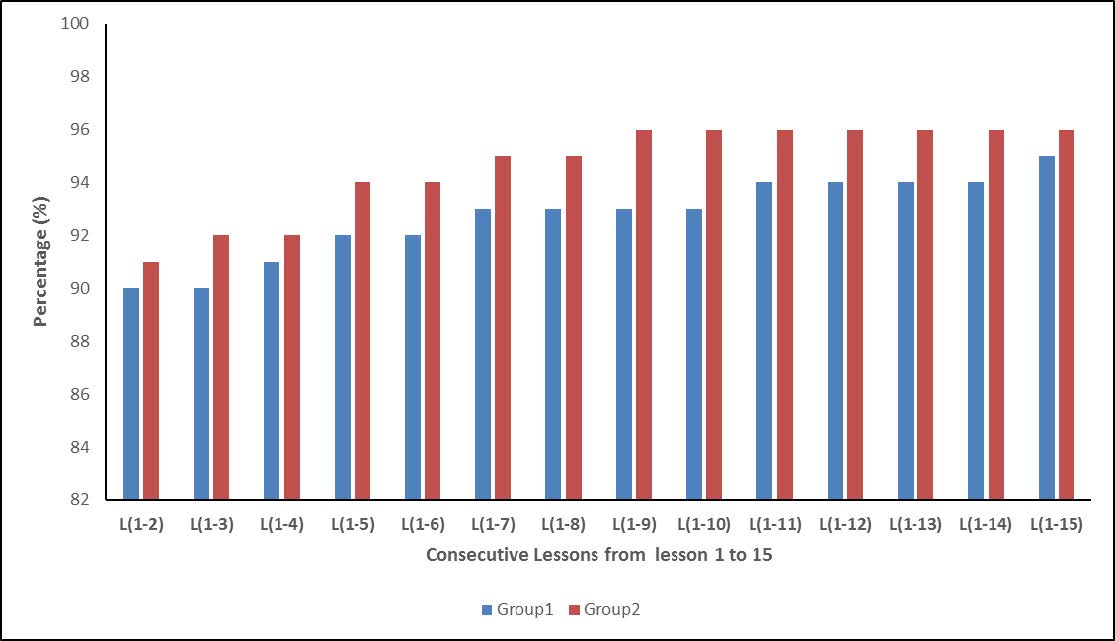

A. Predicting Students’ Performance in Consecutive Lessons

This part has two goals. First, to show the effect of writing comment data during the semester in two different courses, in order to grasp students’ characteristics in consecutive lessons. Second, to examine the role of feedback across a range of lessons in understanding students’ learning situations and attitudes. The Majority Vote method [35] is applied to predict students’ performance in consecutive lessons.
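One way to read the Majority Vote step is sketched below: the grade predicted at lesson n is the most frequent grade among the per-lesson predictions up to lesson n, optionally restricted to the last `window` lessons. The windowing and the tie-break in favor of the most recent lesson are assumptions for illustration; the exact procedure is described in [35].

```python
from collections import Counter
from typing import List, Optional, Sequence


def majority_vote(preds: Sequence[str]) -> str:
    """Most frequent grade; ties go to the grade predicted most recently."""
    counts = Counter(preds)
    top = max(counts.values())
    tied = {g for g, c in counts.items() if c == top}
    return next(g for g in reversed(preds) if g in tied)


def votes_over_lessons(per_lesson: Sequence[str], window: Optional[int] = None) -> List[str]:
    """Apply majority_vote to the predictions available at each lesson."""
    result = []
    for n in range(1, len(per_lesson) + 1):
        start = 0 if window is None else max(0, n - window)
        result.append(majority_vote(per_lesson[start:n]))
    return result


print(votes_over_lessons(["A", "B", "A", "C", "A", "B", "B"]))
# ['A', 'B', 'A', 'A', 'A', 'A', 'B']
```

Voting over consecutive lessons smooths out a single lesson whose comments happen to be uninformative, such as the “C” at lesson 4 in the example.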

VI. RESULTS

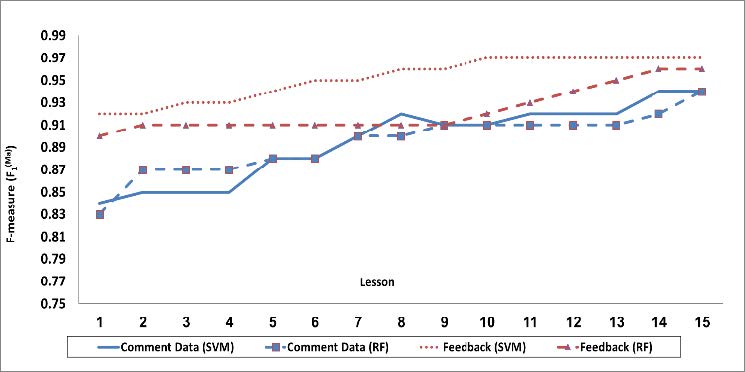

The results are presented in two parts. First, the RF model is compared with the SVM model in predicting student performance in two different courses. Second, the effect of automatic feedback in consecutive lessons during the semester is tested.

Fig. 8. Overall F1 (Ma) results for each lesson

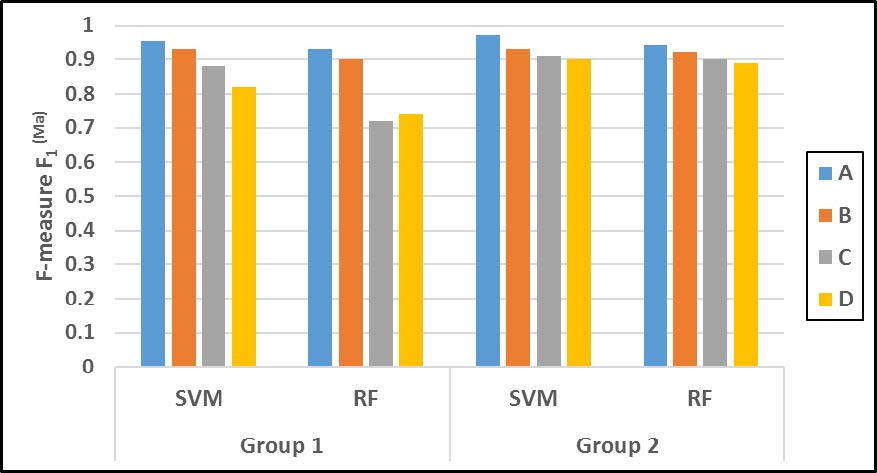

Fig. 9. Overall F1 (Ma) results for each level

Fig. 10. F-measure (F1) from lessons 1 to s for Multimedia Course

Fig. 11. F-measure (F1) from lessons 1 to s for Applied Statistics Course

A. SVM and RF Prediction Results

Fig. 8 shows the F1Ma results for predicting final student grades in the Multimedia course with the RF and SVM models, using comment data only (Group 1) and after giving feedback to students (Group 2). The SVM model achieved better prediction results than the RF model in all lessons. The average overall F1Ma results were 0.90 and 0.89 for SVM and RF, respectively, for Group 1, and 0.95 and 0.92 for Group 2. For the Applied Statistics course, the average overall F1Ma results were 0.89 and 0.87 for SVM and RF for Group 1, and 0.94 and 0.92 for Group 2.

B. The Characteristics of Students’ Grades Between the Two Groups

Tables 2 and 3 and “Fig. 9” show the differences in students’ grades between the two groups for the Multimedia course using the RF and SVM models. SVM achieved the highest results. Also, there is no difference between grades (A, B, C, D) in prediction performance.

Table 2. Overall Prediction Results for Group 1 (Comment data only)

| GRADE | RECALL | PRECISION | F-MEASURE |
|---|---|---|---|
| A | 0.98 | 0.91 | 0.94 |
| B | 1.00 | 0.90 | 0.95 |
| C | 0.93 | 0.83 | 0.87 |
| D | 0.91 | 0.79 | 0.85 |

Table 3. Overall Recall, Precision, and F-measure Results for Group 2 (Giving feedback)

| GRADE | RECALL | PRECISION | F-MEASURE |
|---|---|---|---|
| A | 0.98 | 0.96 | 0.97 |
| B | 1.00 | 0.93 | 0.96 |
| C | 0.91 | 0.96 | 0.94 |
| D | 0.89 | 0.96 | 0.93 |

VII. CONCLUSION

This paper presented a system that combines freestyle comment data and automatic feedback to understand students’ attitudes and activities, address the actual learning gap, recognize their current situation, and increase motivation. The system adds features for collecting high-quality comment data that reflect students’ current situations in all lessons across different courses. SVM and RF were used, and the prediction results (F-measure) were higher for both groups, mainly in the last 7 lessons. Also, Group 2 (giving feedback) achieved the best results in all lessons for the two courses. To improve the prediction results in consecutive lessons, the Majority Vote method was applied to both groups.

To provide feedback, positive and negative expressions were extracted using distinctive words for each question. Furthermore, other attributes from students’ comments were processed to handle deeper semantic terms and provide valuable feedback that enhances students’ performance. In future work, we will try other methods to investigate such words in all courses as clues for improving students’ performance and overcoming their educational problems.

REFERENCES

[1] Carlos V., et al. (2017). Improving the expressiveness of black-box models for predicting student performance. Computers in Human Behavior, 72, 621-631.

[2] Berland, M., Baker, R. S., & Blikstein, P. (2014). Educational data mining and learning analytics: Applications to constructionist research. Technology, Knowledge and Learning, 19, 205-220.

[3] Usamah, b. M., Buniyamin, Arsad, P. M., & Kassim, R. (2013). An overview of using academic analytics to predict and improve students’ achievement: A proposed proactive intelligent intervention. In IEEE 5th Conference on Engineering Education (ICEED), IEEE, 126-130.

[4] Mohamed, A. S., Husain, W., & Nuraini, A. R. (2015). A review on predicting student’s performance using data mining techniques. The Third Information Systems International Conference, Procedia Computer Science, 72, 414-422.

[5] Ibrahim, Z., & Rusli, D. (2007). Predicting students’ academic performance: Comparing artificial neural network, decision tree and linear regression. In 21st Annual SAS Malaysia Forum, 5th September.

[6] Arnold, I. J. (2009). Do examinations influence student evaluations? International Journal of Educational Research, 48(4), 215-224.

[7] Brock, B., Van Roy, K., & Mortelmans, D. (2012). The student as a commentator: Students’ comments in student evaluations of teaching. International Conference on Education and Educational Psychology (ICEEPSY 2012), Procedia - Social and Behavioral Sciences, 69, 1122-1133.

[8] Sorour, S. E., Mine, T., Goda, K., & Hirokawa, S. (2015). A predictive model to evaluate student performance. Journal of Information Processing (IPSJ), 23(2), 192-201.

[9] Johnson, B., & Christensen, L. B. (2014). Educational Research: Quantitative, Qualitative, and Mixed Approaches (5th ed.). Sage Publications.

[10] Alhija, F. N.-A., & Fresko, B. (2009). Student evaluation of instruction: What can be learned from students’ written comments? Studies in Educational Evaluation, 35(1), 37-44.

[11] Hodges, L. C., & Stanton, K. (2007). Translating comments on student evaluations into the language of learning. Innovative Higher Education, 31(5), 279-286.

[12] Oliver, B., Tucker, B., & Pegden (2007). An investigation into student comment behaviors: Who comments, what do they say, and do anonymous students behave badly? Paper presented at the Australian Universities Quality Forum, Hobart.

[13] Agrawal, R., & Batra, M. (2013). A detailed study on text mining techniques. International Journal of Soft Computing and Engineering (IJSCE), ISSN: 2231-2307, 2(6).

[14] Arpita G., & Anand S. (2015). Sentimental analysis techniques for unstructured data. International Journal of Advanced Computational Engineering and Networking, ISSN: 2320-2106, 3(9).

[15] Shute, V. J. (2008). Focus on formative feedback. Review of Educational Research, 78(1), 153-189. https://doi.org/10.3102/0034654307313795

[16] Epstein, M. L., Lazarus, A., Calvano, T., Matthews, K., Hendel, R., & Epstein, B. (2010). Immediate feedback assessment technique promotes learning and corrects inaccurate first responses. The Psychological Record, 52(2), 187-201.

[17] Cheng, G. (2017). The impact of online automated feedback on students’ reflective journal writing in an EFL course. The Internet and Higher Education, 34, 18-27.

[18] Shermis, M. D., & Burstein, J. (2013). Handbook of automated essay evaluation: Current applications and future directions. New York: Routledge.

[19] Natek, S. k., & Zwilling, M. (2014). Student data mining solution-knowledge management system related to higher education institutions. Expert Systems with Applications, 41, 6400-6407.

[20] Romero, C., & Ventura, S. (2013). Data mining in education. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, 3(1), 12-27.

[21] Zenobia, C. Y. Chan, Stanley, D. J., Robert J. M., & Chien, W. T. (2017). A qualitative study on feedback provided by students in nurse education. Nurse Education Today, 55, 128-133.

[22] Akçapınar, G. (2015). How automated feedback through text mining changes plagiaristic behavior in online assignments. Computers & Education, 87, 123-130.

[23] Burgos, C., Campanario, M., Peña, D., Lara, J. A., Lizcano, D., & Martínez, M. A. (2017). Data mining for modeling students’ performance: A tutoring action plan to prevent academic dropout. Computers and Electrical Engineering, 113, 1-16.

[24] Asif, R., Merceron, A., Ali, S. A., & Haider, N. G. (2017). Analyzing undergraduate students’ performance using educational data mining. Computers & Education, 113, 177-194.

[25] Benjamin, P., Bassam, M., & Barbara, H. (2016). Adaptive structure metrics for automated feedback provision in intelligent tutoring systems. Neurocomputing, 192, 3-13.

[26] Hien M., Zhu, C., & Nguyet A. D. (2017). The effect of blended learning on student performance at course-level in higher education: A meta-analysis. Studies in Educational Evaluation, 53, 17-28.

[27] Zhu, M., Liu, O. L., & Lee, H. (2020). The effect of automated feedback on revision behavior and learning gains in formative assessment of scientific argument writing. Computers & Education, 143, 112-126.

[28] Anderson, T., Rourke, L., Garrison, D. R., & Archer, W. (2001). Assessing teaching presence in a computer conferencing context. Journal of Asynchronous Learning Networks, 5(2), 1-17. https://doi.org/10.24059/olj.v5i2.1875

[29] Dihoff, R. E., Brosvic, G. M., & Epstein, M. L. (2003). The role of feedback during academic testing: The delay retention effect revisited. Psychological Record, 53, 533-548.

[30] Chen, X., Breslow, L., & DeBoer, J. (2018). Analyzing productive learning behaviors for students using immediate corrective feedback in a blended learning environment.

[31] Ghaddar, B., & Naoum, S. J. (2018). High dimensional data classification and feature selection using support vector machines. European Journal of Operational Research, 265, 993-1004.

[32] Panja, R., & Nikhil R. (2018). MS-SVM: Minimally spanned support vector machine. Applied Soft Computing, 64, 356-365.

[33] Abellán, J., Mantas, C., Castellano, J., & Moral, G. S. (2018). Increasing diversity in random forest learning algorithm via imprecise probabilities. Expert Systems with Applications, 97, 228-243.

[34] Yang, Y., & Liu, X. (1999). A re-examination of text categorization methods. Proceedings of the 22nd Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 42-49.

[35] Luo, J., Sorour, S. E., Goda, K., & Mine, T. (2015). Correlation of grade prediction performance with characteristics of lesson subject. Proceedings of the IEEE 15th International Conference on Advanced Learning Technologies, 247-249. doi: 10.1109/ICALT.2015.24.

BIOGRAPHICAL INFORMATION

Dr. Shaymaa E. Sorour, PhD

Assistant Professor, Computer Science and Education, Department of Educational Technology, Faculty of Specific Education

Kafrelsheikh University

Egypt

She is an assistant professor of Computer Science and Education, Department of Educational Technology, Faculty of Specific Education, Kafrelsheikh University, Egypt. She is Director of the Quality Assurance Unit, Faculty of Specific Education, Kafrelsheikh University. She received her PhD in Computer Science and Education in 2016 from the Department of Advanced Information Technology, Faculty of Information Science and Electrical Engineering, Kyushu University, Japan. Her specializations are Computer Science, Artificial Intelligence, and Machine Learning Algorithms. She received the best paper award at the 5th IIAI International Congress on Advanced Applied Informatics, July 2016.

Dr. Hanan E. Abdelkader, PhD

Assistant Professor, Computer Science and Education, Department of Computer Teacher Preparation, Faculty of Specific Education

Mansoura University

Egypt

She is an assistant professor of Computer Science and Education, Department of Computer Teacher Preparation, Faculty of Specific Education, Mansoura University, Egypt. She received her PhD in 2013 from the Computer Teacher Preparation Department, Faculty of Specific Education, Mansoura University. She has been a certified instructor at the Professional Academy for Teachers, Ministry of Education, Egypt, since 1/10/2017. She served as Humanities Sector Coordinator at the Office of Vocational Guidance for Rehabilitation and Continuous Training, headed by the Vice President for Education and Student Affairs, from 01/29/2017 to 28/1/2018. Her specializations are Computer Science, Artificial Intelligence, and Web Mining.